In this post, we're going to explore building a bot for Slack. Rather than having this bot respond to simple commands, though, let's do something more... fun.

Imagine there's a person on your company's Slack team (we'll call him Jeff). Everything that Jeff says is patently Jeff. Maybe you've even coined a term amongst your group: a Jeffism. What if you could program a Slack bot that randomly generates messages that are undeniably Jeff?

We're going to do exactly that, and we're going to do it using Markov chains.

Markov Chains

The math Markov pioneered is pretty fascinating: check out this page for a very good explanation and visualization of how Markov chains work.

Markov chains can be applied to any dataset that has discrete probabilities for a new state, given the current state. These chains can be applied in computational linguistics to generate random sentences that mimic the author, given a large corpus of text they have written. This is done by selecting a word (the current state) and then selecting the next word (the next state), based on the probability that word B follows word A in our training data. The chain is terminated when punctuation that terminates the sentence is encountered.
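To make the idea concrete, here is a minimal sketch of a word-level chain in plain Python. It is not how Markovify is implemented; the function names (build_chain, generate) and the three-sentence corpus are made up for illustration, and it only looks at one previous word when picking the next one.

```python
import random
from collections import defaultdict

# Punctuation that ends a sentence and therefore terminates the chain
TERMINATORS = (".", "!", "?")


def build_chain(corpus):
    """Map each word to the list of words observed directly after it."""
    transitions = defaultdict(list)
    starts = []
    for sentence in corpus:
        words = sentence.split()
        if not words:
            continue
        starts.append(words[0])
        for current_word, next_word in zip(words, words[1:]):
            # Repeats are kept on purpose: a word that follows more often
            # appears more often in the list, so random choice is weighted.
            transitions[current_word].append(next_word)
    return starts, transitions


def generate(starts, transitions, rng, max_words=30):
    """Walk the chain from a random start word until a sentence ends."""
    word = rng.choice(starts)
    sentence = [word]
    while not word.endswith(TERMINATORS) and len(sentence) < max_words:
        followers = transitions.get(word)
        if not followers:
            break
        word = rng.choice(followers)
        sentence.append(word)
    return " ".join(sentence)


# Tiny hypothetical corpus standing in for "everything Jeff has written"
corpus = [
    "I love coffee in the morning.",
    "I love meetings that end early.",
    "Meetings in the morning need coffee.",
]
starts, transitions = build_chain(corpus)
sentence = generate(starts, transitions, random.Random(42))
print(sentence)
```

Every pair of neighboring words in the output was seen together somewhere in the corpus, which is why the result tends to sound like the original author even when the full sentence is new.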

Now, if the math is a little fuzzy to you, don't worry. We'll be using a Python library called Markovify that implements these chains, but it's important to understand at least conceptually the math behind it--especially if you want to tune the model.

Let's Get Started

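Create a new Python virtual environment and install our two project dependencies, markovify and slackclient. The mkvirtualenv command comes from virtualenvwrapper; any other way of creating a virtualenv works just as well. The code in this post uses the slackclient 1.x API (the SlackClient class), which was replaced in the 2.x releases, so we pin the version below 2:

    mkvirtualenv parrotbot
    pip install markovify "slackclient<2"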

We'll also need to generate two different authentication tokens for Slack. The first is our bot token. It allows our script to connect to Slack as our bot user and post messages on its behalf. You can create bot users and tokens at Slack's API site.

This token lets us authenticate as the bot, but we're also going to use the Slack API to search for messages within our team. Bots don't get this privilege (the search.messages API method), so we'll need to authenticate those requests as a standard user. The easiest way to generate a user token for yourself is through the test token generator.

Keep in mind that API calls using this token will be authenticated as you. This means when you query the API for messages, it will return anything you have privileges to see, including private messages with your target user. For this reason, it is smart to format your query to limit its search to public channels ('from:username in:general', for example) to ensure generated Markov chains do not contain private information.

bot.py

This is where we'll keep all of the logic for our bot. We'll go with a very straightforward design: a single application loop that runs when bot.py is called directly.

    import markovify
    import time

    from slackclient import SlackClient

    BOT_TOKEN = "insert bot token here"
    GROUP_TOKEN = "insert slack group token here"


    def main():
        """
        Startup logic and the main application loop to monitor Slack events.
        """
        # Create the slackclient instance
        sc = SlackClient(BOT_TOKEN)

        # Connect to slack
        if not sc.rtm_connect():
            raise Exception("Couldn't connect to slack.")

        # Where the magic happens
        while True:
            # Examine latest events
            for slack_event in sc.rtm_read():

                # Disregard events that are not messages
                if not slack_event.get('type') == "message":
                    continue

                message = slack_event.get("text")
                user = slack_event.get("user")
                channel = slack_event.get("channel")

                # Skip events with no text or no sending user
                if not message or not user:
                    continue

                ######
                # Commands we're listening for.
                ######

                if "ping" in message.lower():
                    sc.rtm_send_message(channel, "pong")

            # Sleep for half a second
            time.sleep(0.5)


    if __name__ == '__main__':
        main()

The application is basically just a giant while loop. During each iteration of the loop, we examine any events that have been sent across the Slack RTM web socket. If we find an event of type message, we grab some key details and then examine the message text to decide whether any action should be taken. At the end of each loop iteration, the code sleeps for half a second (precisely 300 eternities in CPU clock time), which keeps the bot responsive while leaving your CPU almost entirely idle.

In its current state, the bot only does one thing: it responds to "ping" commands with "pong" in the same channel where it received the message. Fire up the script, invite your bot to a channel and test it out.
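If you want to check the filtering logic without a live Slack connection, one option is to pull it out of the loop into small functions. The sketch below is an assumption about how you might restructure things, not part of the bot above: parse_message_event and reply_for are hypothetical names, and the sample events are hand-written dictionaries with made-up IDs that mimic the fields the loop reads.

```python
def parse_message_event(slack_event):
    """Return (text, user, channel) for usable message events, else None."""
    if slack_event.get("type") != "message":
        return None
    text = slack_event.get("text")
    user = slack_event.get("user")
    # Events such as edits or joins may lack text or a sending user
    if not text or not user:
        return None
    return text, user, slack_event.get("channel")


def reply_for(text):
    """Map message text to a reply, or None when no command matches."""
    if "ping" in text.lower():
        return "pong"
    return None


# Hand-written sample events (hypothetical IDs), in the order the loop might see them
events = [
    {"type": "hello"},
    {"type": "message", "subtype": "message_changed", "channel": "C123"},
    {"type": "message", "text": "Ping?", "user": "U123", "channel": "C123"},
]

replies = []
for event in events:
    parsed = parse_message_event(event)
    if parsed is None:
        continue
    text, user, channel = parsed
    reply = reply_for(text)
    if reply:
        replies.append((channel, reply))

print(replies)
```

Only the last event survives both filters, so the bot would send a single "pong" to that channel.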

Building the Markov Chain Generator

If you had all of the text you wanted to seed the generator with lying around, you could toss it in as a string and get straight to business. But we'd like something more responsive: something that can learn over time, continually adding new text to the model. We'll accomplish that by seeding the model with messages pulled from Slack's API.

A quick way to store these messages is as JSON. Every Slack message returned by the search API has a permalink field that we can treat as a unique identifier. Using Python's built-in json module, we can load the file into a dictionary keyed by permalink. Writes are then idempotent (a duplicate message simply overwrites its existing key), and simple dictionary operations tell you everything you need to know about your text corpus: len(corpus.keys()) is the number of messages, corpus.values() is a list of all text in the corpus, and so on.
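Here is a small sketch of that storage idea on its own. The permalinks and message text are invented sample data, add_message is a hypothetical helper, and the file is written to a temporary directory so the example does not touch a real database.

```python
import json
import os
import tempfile


def add_message(message_db, permalink, text):
    """Insert or overwrite one message, keyed by its permalink."""
    message_db[permalink] = text
    return message_db


db = {}
add_message(db, "https://example.slack.com/archives/C1/p1", "Ship it.")
add_message(db, "https://example.slack.com/archives/C1/p2", "Works on my machine.")
# Adding the same permalink again overwrites the key instead of duplicating it
add_message(db, "https://example.slack.com/archives/C1/p1", "Ship it.")

message_count = len(db)
corpus_text = " ".join(db.values())

# Round-trip the dictionary through a JSON file on disk
path = os.path.join(tempfile.mkdtemp(), "message_db.json")
with open(path, "w") as json_file:
    json.dump(db, json_file)
with open(path) as json_file:
    reloaded = json.load(json_file)

print(message_count, reloaded == db)
```

Because the permalink is the key, re-running a search that returns messages we already have leaves the count unchanged, which is what lets the bot report how many messages are actually new.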

Take a look at chain.py, which pulls messages from the Slack search API (search.messages), builds a markovify model, and stores the messages on disk in a JSON file behind some simple data access functions.

Tying it all Together

With the chain builder in place, we can tie this back to the original bot event loop. We can listen for a command and generate a sentence from the Markovify text model. We can also listen for a command and fetch new messages from the Slack API--if new messages are found, we can re-generate the model to use the new content.

Here's the final script:

    import json
    import markovify
    import re
    import time

    from slackclient import SlackClient

    BOT_TOKEN = "bot user API token"
    GROUP_TOKEN = "slack user API token"

    MESSAGE_QUERY = "from:username_to_parrot"
    MESSAGE_PAGE_SIZE = 100
    DEBUG = True


    def _load_db():
        """
        Reads 'database' from a JSON file on disk.
        Returns a dictionary keyed by unique message permalinks.
        """
        try:
            with open('message_db.json', 'r') as json_file:
                messages = json.load(json_file)
        except IOError:
            # No database yet: create an empty one
            with open('message_db.json', 'w') as json_file:
                json_file.write('{}')
            messages = {}

        return messages


    def _store_db(obj):
        """
        Takes a dictionary keyed by unique message permalinks and writes it to the JSON 'database' on disk.
        """
        with open('message_db.json', 'w') as json_file:
            json_file.write(json.dumps(obj))

        return True


    def _query_messages(client, page=1):
        """
        Convenience method for querying messages from Slack API.
        """
        if DEBUG:
            print("requesting page {}".format(page))

        return client.api_call('search.messages', query=MESSAGE_QUERY, count=MESSAGE_PAGE_SIZE, page=page)


    def _add_messages(message_db, new_messages):
        """
        Search through an API response and add all messages to the 'database' dictionary.
        Returns updated dictionary.
        """
        for match in new_messages['messages']['matches']:
            message_db[match['permalink']] = match['text']

        return message_db


    # get all messages, build a giant text corpus
    def build_text_model():
        """
        Read the latest 'database' off disk and build a new markov chain generator model.
        Returns TextModel.
        """
        if DEBUG:
            print("Building new model...")

        messages = _load_db()
        return markovify.Text(" ".join(messages.values()), state_size=2)


    def format_message(original):
        """
        Do any formatting necessary to Markov chains before relaying to Slack.
        """
        if original is None:
            return

        # Clear <> from urls. The replacement must be a raw string so that
        # \1 is a backreference, and [^>]* keeps each match inside one <...>.
        cleaned_message = re.sub(r'<(htt[^>]*)>', r'\1', original)

        return cleaned_message


    def update_corpus(sc, channel):
        """
        Queries for new messages and adds them to the 'database' object if new ones are found.
        Reports back to the channel where the update was requested on status.
        """
        sc.rtm_send_message(channel, "Leveling up...")

        # Messages will get queried by a different auth token
        # So we'll temporarily instantiate a new client with that token
        group_sc = SlackClient(GROUP_TOKEN)

        # Load the current database
        messages_db = _load_db()
        starting_count = len(messages_db.keys())

        # Get first page of messages
        new_messages = _query_messages(group_sc)
        total_pages = new_messages['messages']['paging']['pages']

        # store new messages
        messages_db = _add_messages(messages_db, new_messages)

        # If any subsequent pages are present, get those too
        if total_pages > 1:
            for page in range(2, total_pages + 1):
                new_messages = _query_messages(group_sc, page=page)
                messages_db = _add_messages(messages_db, new_messages)

        # See if any new keys were added
        final_count = len(messages_db.keys())
        new_message_count = final_count - starting_count

        # If the count went up, save the new 'database' to disk, report the stats.
        if final_count > starting_count:
            # Write to disk since there is new data.
            _store_db(messages_db)
            sc.rtm_send_message(channel, "I have been imbued with the power of {} new messages!".format(
                new_message_count
            ))
        else:
            sc.rtm_send_message(channel, "No new messages found :(")

        if DEBUG:
            print("Start: {} Final: {} New: {}".format(
                starting_count, final_count, new_message_count))

        # Make sure we close any sockets to the other group.
        del group_sc

        return new_message_count


    def main():
        """
        Startup logic and the main application loop to monitor Slack events.
        """
        # build the text model
        model = build_text_model()

        # Create the slackclient instance
        sc = SlackClient(BOT_TOKEN)

        # Connect to slack
        if not sc.rtm_connect():
            raise Exception("Couldn't connect to slack.")

        # Where the magic happens
        while True:
            # Examine latest events
            for slack_event in sc.rtm_read():

                # Disregard events that are not messages
                if not slack_event.get('type') == "message":
                    continue

                message = slack_event.get("text")
                user = slack_event.get("user")
                channel = slack_event.get("channel")

                if not message or not user:
                    continue

                ######
                # Commands we're listening for.
                ######

                if "parrot me" in message.lower():
                    markov_chain = model.make_sentence()
                    # make_sentence() returns None when it can't build a sentence
                    if markov_chain:
                        sc.rtm_send_message(channel, format_message(markov_chain))

                if "level up parrot" in message.lower():
                    # Fetch new messages. If new ones are found, rebuild the text model
                    if update_corpus(sc, channel) > 0:
                        model = build_text_model()

            # Sleep for half a second
            time.sleep(0.5)


    if __name__ == '__main__':
        main()

There are a few things that can be tuned from this point. Updating the format_message function lets you apply any formatting to the outbound sentence generated by the bot. New commands can be added to the event loop in main. The build_text_model function can be updated to tune the Markov chain generator more finely with more advanced usage of markovify.
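As one example of the first kind of tuning, here is a sketch of a format_message that handles a bit more of Slack's message markup. It assumes links can arrive as <url|label> as well as <url>, and that user mentions look like <@U024BE7LH>. Replacing mentions with a neutral "@someone" is purely a choice made for this example (so the bot doesn't ping real people); the pattern names are hypothetical.

```python
import re

# Matches <https://example.com> and <https://example.com|example.com>
LINK_PATTERN = re.compile(r"<(https?://[^|>]+)(?:\|[^>]*)?>")
# Matches <@U024BE7LH> and <@U024BE7LH|jeff>
MENTION_PATTERN = re.compile(r"<@([A-Z0-9]+)(?:\|[^>]*)?>")


def format_message(original):
    """Tidy a generated sentence before relaying it to Slack."""
    if original is None:
        return None
    # Keep only the URL itself, dropping the angle brackets and any label
    cleaned = LINK_PATTERN.sub(r"\1", original)
    # Swap user mentions for a neutral placeholder (example-only choice)
    cleaned = MENTION_PATTERN.sub("@someone", cleaned)
    return cleaned


print(format_message("ask <@U024BE7LH> about <https://example.com|example.com>"))
```

Anything that doesn't match either pattern passes through untouched, so this can be dropped in place of the simpler version and extended one rule at a time.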

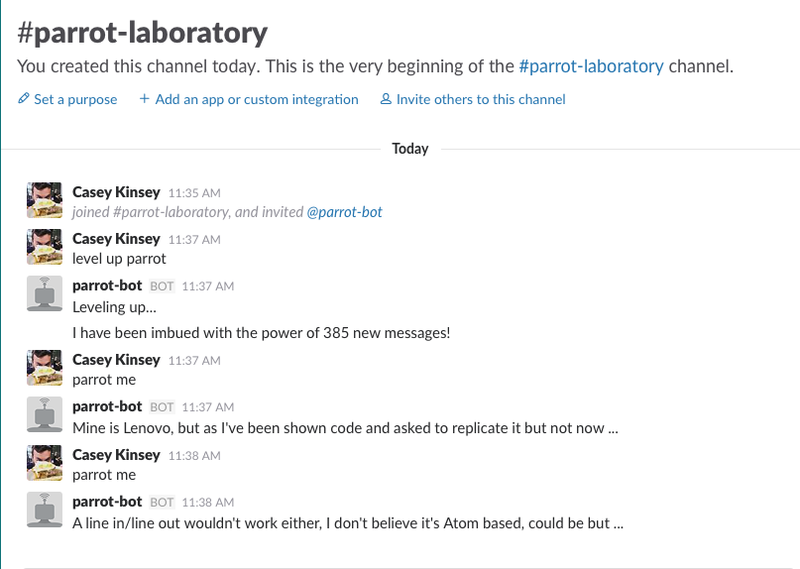

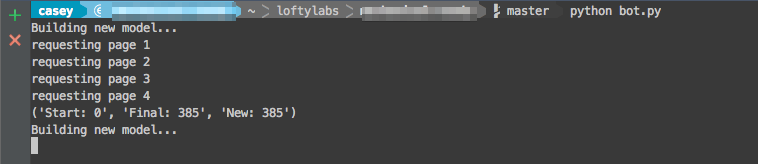

When you run the bot and issue some commands, you should see some debug output in your terminal and some updates in Slack. And, of course, when you ask the bot to "parrot me", you get a Markov chain generated from your corpus! (Be sure to invite your bot user to the channel you're testing it in.)

One interesting direction to take this would be to allow the bot to segment messages from different users (by adding a higher level of nesting to the JSON database keyed by user). You could then build text models on a per user basis and instruct your bot to generate sentences for specific users by referencing their name in a command.
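A rough sketch of what that nesting could look like is below. The helper names (add_user_message, corpus_for) and the sample data are hypothetical, and how you get the author's name out of each search result is left as an assumption to check against the API response you actually receive.

```python
def add_user_message(message_db, username, permalink, text):
    """Store a message under its author, keyed by permalink."""
    message_db.setdefault(username, {})[permalink] = text
    return message_db


def corpus_for(message_db, username):
    """Join every stored message for one user into a single training string."""
    return " ".join(message_db.get(username, {}).values())


# Hypothetical sample data; real entries would come from the search results
db = {}
add_user_message(db, "jeff", "https://example.slack.com/archives/C1/p1", "Ship it.")
add_user_message(db, "jeff", "https://example.slack.com/archives/C1/p2", "Works on my machine.")
add_user_message(db, "ana", "https://example.slack.com/archives/C1/p3", "Did you write a test?")

jeff_corpus = corpus_for(db, "jeff")
unknown_corpus = corpus_for(db, "nobody")
print(jeff_corpus)
```

Each per-user string could then be handed to markovify.Text the same way build_text_model does today, giving you one model per teammate.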

Markov chains have many (much more practical) applications in computational linguistics and general computer science / statistical modeling, but this has been a fun way to apply them here at Lofty Labs to get our team interested in the math behind them.
